How To Install Stable Diffusion On Windows 10

French writer and philosopher Voltaire once said that "originality is nothing but judicious imitation," and when it comes to the use of artificial intelligence, he's absolutely right.

Using a wealth of complex math, powerful supercomputers can be used to analyze billions of images and text, creating a numerical map of probabilities between the two. One such map is called Stable Diffusion, and since it made an appearance, it's been the subject of wonder, derision, and fervent use.

And best of all, you can use it too, thanks to our detailed guide on how to use Stable Diffusion to generate AI images and more!

What exactly is Stable Diffusion?

The short answer is that Stable Diffusion is a deep learning algorithm that uses text as an input to create a rendered image. The longer answer is... complex... to say the least, but it all involves a bunch of computer-based neural networks that have been trained with selected datasets from the LAION-5B project -- a collection of 5 billion images, each with an associated descriptive caption. The end result is that, when given a few words, the machine learning model calculates and then renders the image that most probably fits them.

Stable Diffusion is unusual in its field because the developers (a collaboration between Stability AI, the Computer Vision & Learning Group at LMU Munich, and Runway AI) made the source code and the model weights publicly available. Model weights are essentially a very large data array that controls how much the input affects the output.

There are two major releases of Stable Diffusion -- version 1 and version 2. The main differences lie in the datasets used to train the models and the text encoder. There are four primary models available for version 1:

- SD v1.1 = created from 237,000 training steps at a resolution of 256 x 256, using the laion2B-en subset of LAION-5B (2.3 billion images with English descriptions), followed by 194,000 training steps at a resolution of 512 x 512 using the laion-high-resolution subset (0.2 billion images with resolutions greater than 1024 x 1024).

- SD v1.2 = additional training of SD v1.1 with 515,000 steps at 512 x 512 using the laion-improved-aesthetics subset of laion2B-en, filtered to select images with higher aesthetic scores and without watermarks

- SD v1.3 = further training of SD v1.2 using around 200k steps, at 512 x 512, on the same dataset as above, but with 10% of the text conditioning dropped during training to improve classifier-free guidance sampling

- SD v1.4 = another round of 225k steps at 512 x 512, resumed from the SD v1.2 checkpoint with the same text-conditioning dropout as v1.3

For version 2, all of the datasets and neural networks used were open-source and differed in image content.

The update wasn't without criticism, but it can produce superior results -- the base model can be used to make images that are 768 x 768 in size (compared to 512 x 512 in v1), and there's even a model for making 2K images.

When getting started in AI image generation, though, it doesn't matter which model you use. With the right hardware, a bit of computing knowledge, and plenty of spare time to explore everything, anyone can download all the relevant files and get stuck in.

Getting started with AI image creation

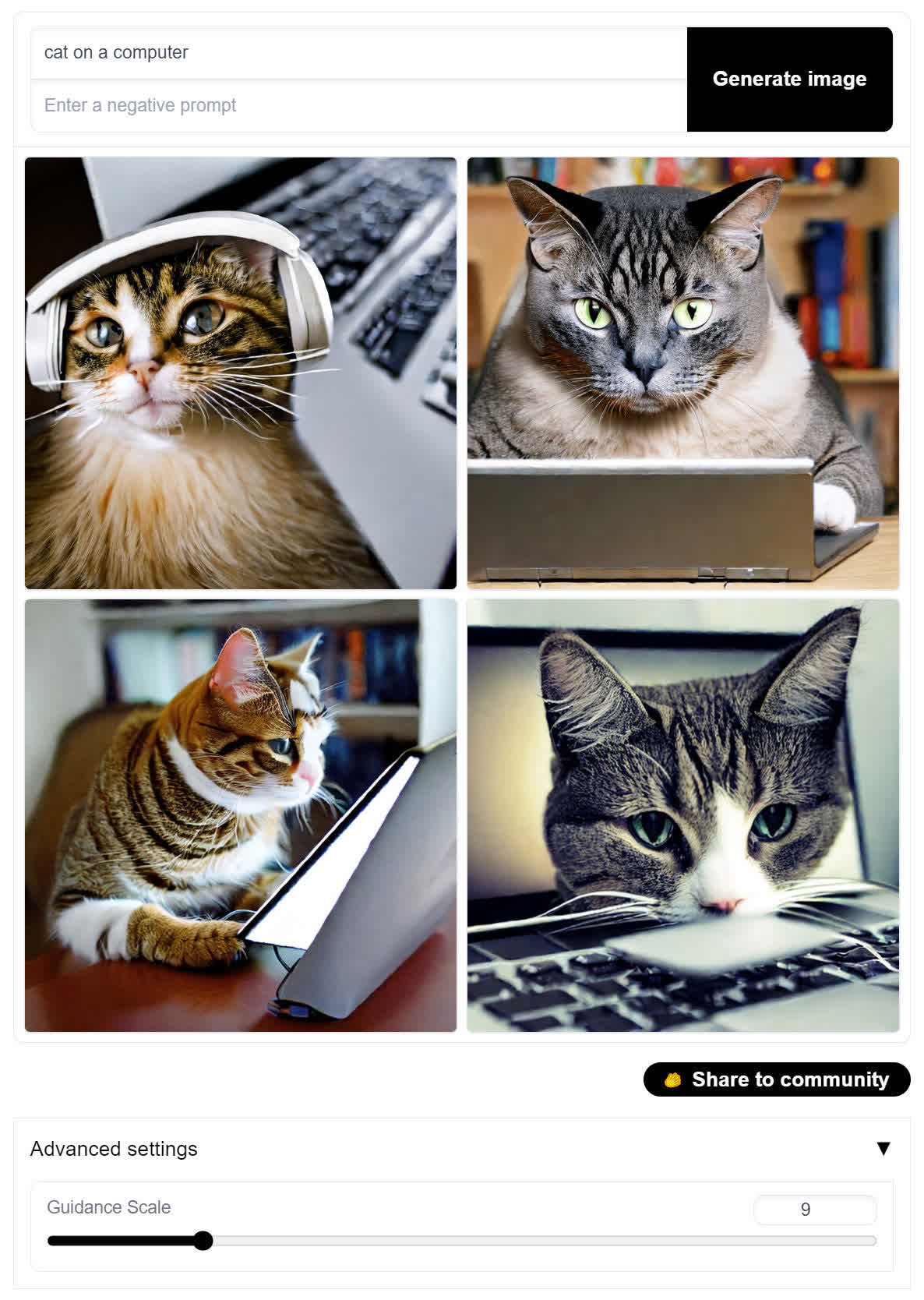

If you want a quick and simple go at using Stable Diffusion without getting your hands dirty, you can try a demo of it here.

You have two text fields to complete: the first is a positive prompt that tells the algorithm to focus on those input words. The second, a negative prompt, tells the algorithm to remove such items from the image it is about to generate.

There's one additional thing you can change in this simple demo. Under the Advanced Options, the higher the guidance scale is, the more rigidly the algorithm will stick to the input words. Set this too high and you'll end up with an unholy mess, but it's still worth experimenting to see what you can get.

The demo is rather limited and slow because the calculations are being done on a shared server. If you want more control over the output, then you'll need to download everything onto your own machine. So let's do just that!

One-click installers for Windows and a macOS alternative

While this article is based on a more involved installation process of the Stable Diffusion webUI project (next section below), and we mean to explain the basic tools at your disposal (don't miss the section about prompts and samples!), the SD community is rapidly evolving and an easier installation method is one of the things most people want.

We have three potential installation shortcuts:

Right before we published this article, we discovered Reddit's discussion about A1111's Stable Diffusion WebUI Easy Installer, which automates most of the download/installation steps below. If it works for you, great. If not, the manual process is not so bad.

There's a second excellent project called NMKD Stable Diffusion GUI, which aims to do the same, but it's all contained in a single downloadable package that works like a portable app. Just unpack and run. We tried it and it worked beautifully. NMKD's project is also one of the few to support AMD GPUs (still experimental) -- thanks for the tip, Tinckerbel.

For macOS users, there's also an easy install option and app called DiffusionBee, which works great with Apple processors (it runs a tad slower on Intel chips). It requires macOS 12.5.1 or higher.

Installing Stable Diffusion on Windows

Let's begin with an important caveat -- Stable Diffusion was initially developed to be processed on Nvidia GPUs and you'll need one with at least 4GB of VRAM, though it performs a lot better with double that figure. Due to the open-source nature of the project, it can be made to work on AMD GPUs, though it's not as easy to set up and it won't run as fast; we'll tackle this later in the article.

For now, head over to the Stable Diffusion webUI project on GitHub. This is a work-in-progress system that manages most of the relevant downloads and instructions and neatly wraps it all up in a browser-based interface.

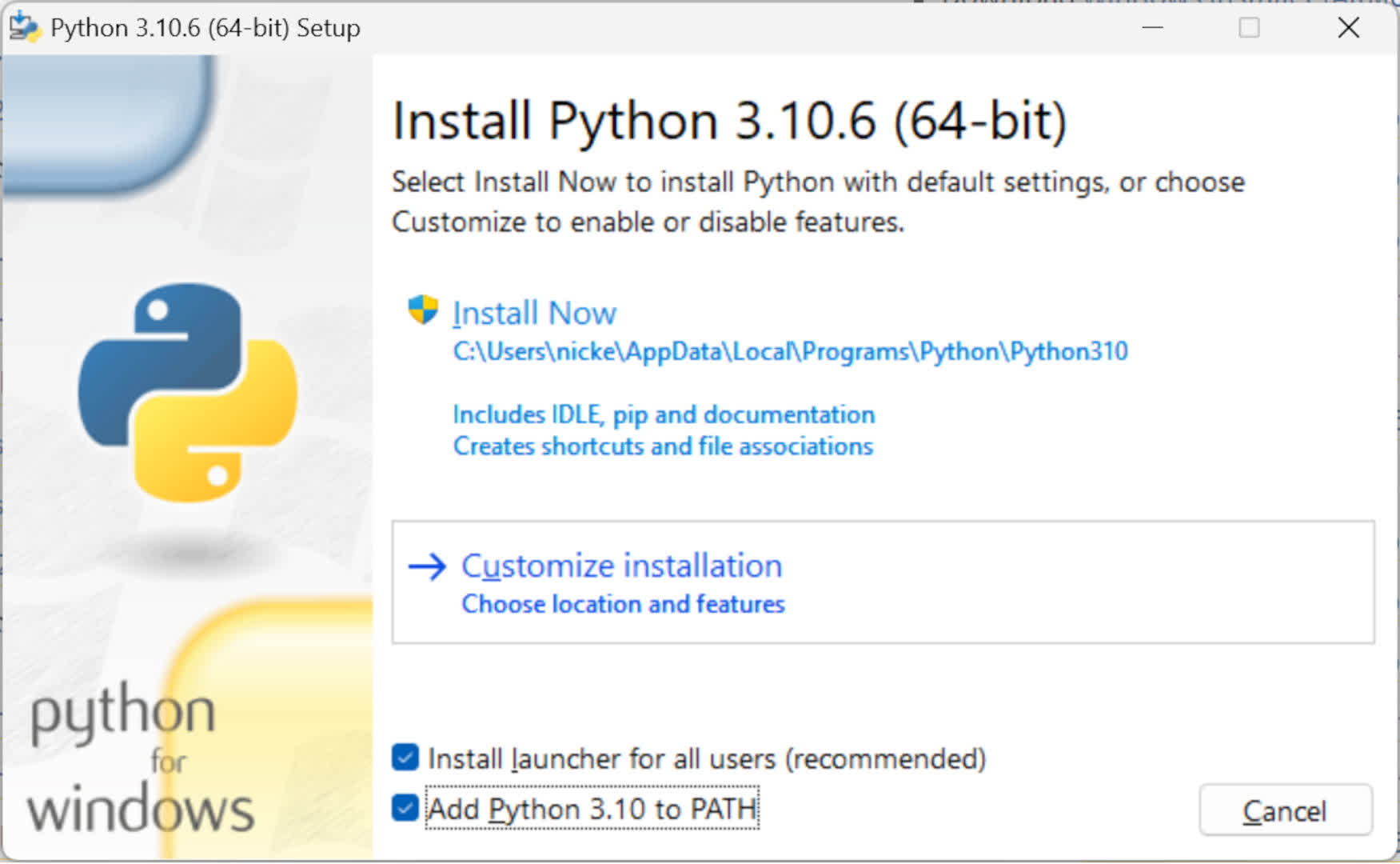

Step 1 -- Install Python

The first step is to download and install Python 3.10.6.

When you install Python, make sure you check the Add to PATH option. Everything else can remain in the default settings.

If you've already got other versions of Python installed and you don't need them, uninstall those first. Otherwise, create a new user account with admin rights and switch to that user before installing v3.10.6 -- this will help prevent the system from getting confused over which Python it's supposed to use.
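To confirm which Python a fresh command prompt will actually pick up, you can save a few lines like the ones below as a .py file and run it with python. This is our own minimal sketch rather than anything shipped with Stable Diffusion webUI, and it assumes that any 3.10.x release is close enough, whereas the guide itself recommends 3.10.6 specifically.

```python
import sys

# The guide recommends Python 3.10.6 for Stable Diffusion webUI.
RECOMMENDED = (3, 10, 6)


def is_recommended_python(version=None):
    """Return True if `version` (major, minor, micro) is on the 3.10 line.

    Defaults to the interpreter running this script. Only major and minor
    are compared, so any 3.10.x patch release passes (our assumption).
    """
    if version is None:
        version = sys.version_info[:3]
    return tuple(version[:2]) == RECOMMENDED[:2]


if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    wanted = ".".join(str(part) for part in RECOMMENDED)
    if is_recommended_python():
        print(f"Python {found} is the one on PATH -- looks good")
    else:
        print(f"Python {found} is on PATH, but the guide recommends {wanted}")
```

If the script reports a different version, the Add to PATH step or an older installation is the usual suspect.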

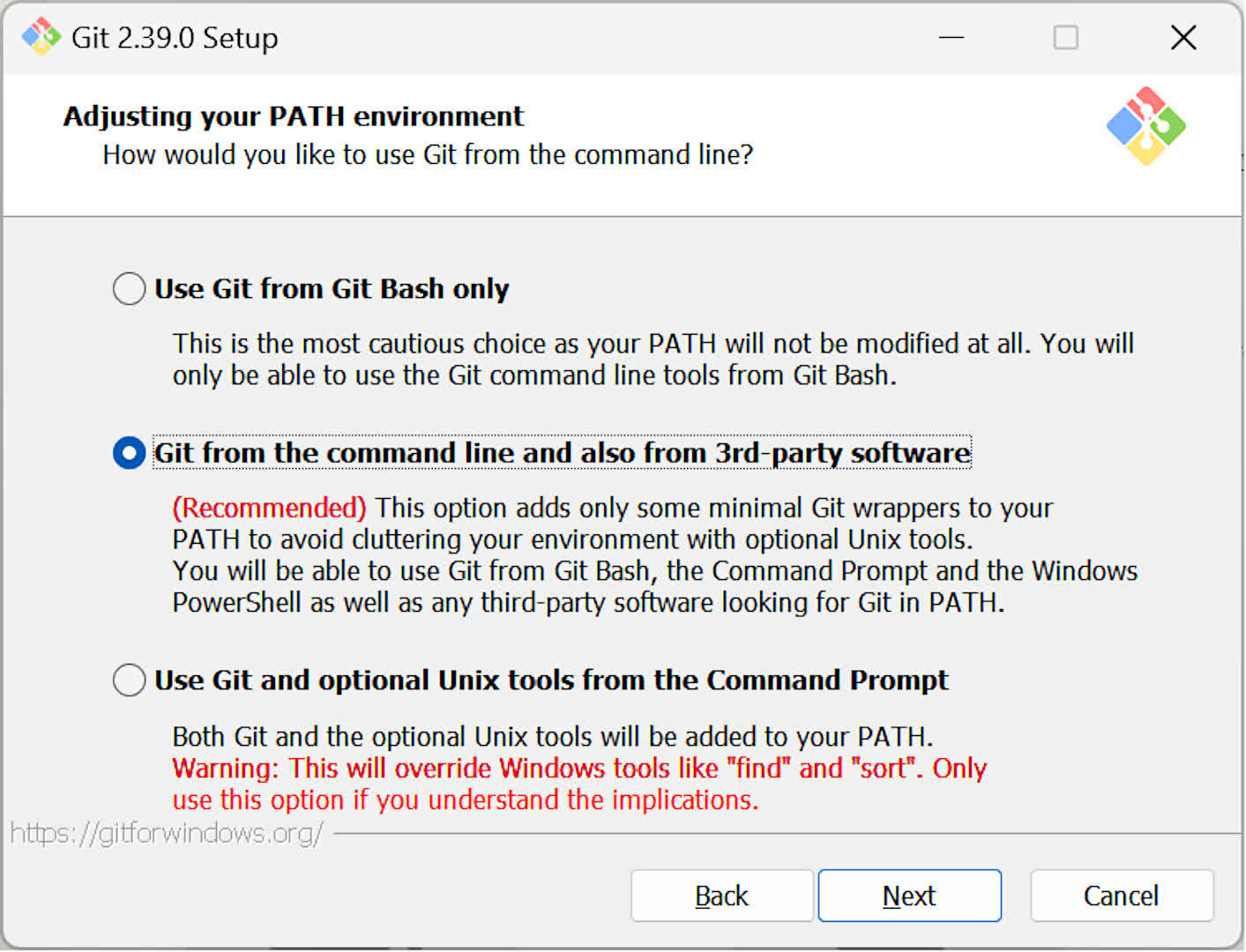

Step 2 -- Install Git

The next thing to do is install Git -- this will automate collecting all of the files you need from GitHub. You can leave all of the installation options in the default settings, but one that is worth checking is the PATH environment option.

Make sure that this is set to Git from the command line, as we'll be using this to install all of the goodies we need.

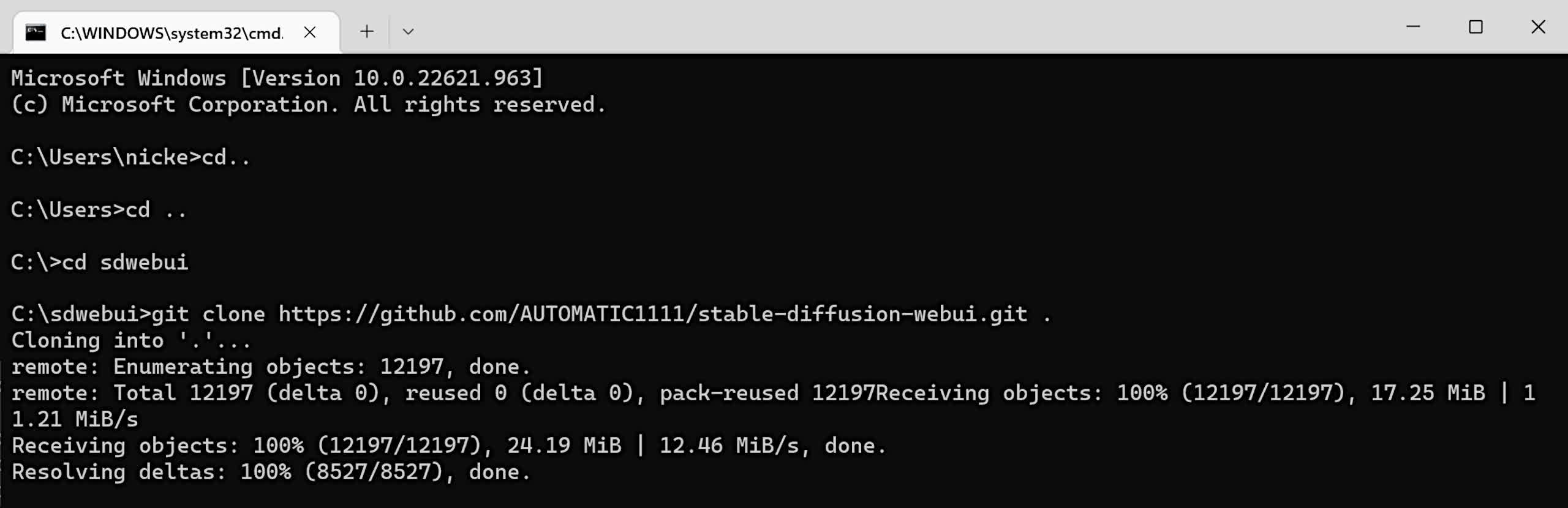

Step 3 -- Copy Stable Diffusion webUI from GitHub

With Git on your computer, use it to copy across the setup files for Stable Diffusion webUI.

- Create a folder in the root of any drive (e.g. C:\) -- and name the folder "sdwebui"

- Press Windows key + R, type in cmd, and press Enter to open the classic command prompt

- Enter cd \ then type in cd sdwebui

- And then type: git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git . and press Enter

There's supposed to be a space between the last t and the period -- this stops Git from creating an extra folder you'd have to navigate into every time you want to use Stable Diffusion webUI.

Depending on how fast your internet connection is, you should soon see a whole stack of folders and files. Ignore them for the moment, as we need to get one or two more things.
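For anyone who prefers scripting this step, the same clone can be expressed in Python. This is a sketch under our own assumptions: the helper names are hypothetical, Git must already be on PATH from Step 2, and cloning into "." only works when the target folder is empty.

```python
import shutil
import subprocess
from pathlib import Path

REPO_URL = "https://github.com/AUTOMATIC1111/stable-diffusion-webui.git"


def clone_command(repo_url=REPO_URL):
    # The trailing "." makes git clone into the current folder instead of
    # creating an extra "stable-diffusion-webui" subfolder.
    return ["git", "clone", repo_url, "."]


def clone_into(folder):
    """Create `folder` if needed and clone the webUI repository into it.

    git refuses to clone into "." unless the folder is empty.
    """
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    if shutil.which("git") is None:
        raise RuntimeError("git was not found on PATH; revisit Step 2")
    # cwd= does the job of the "cd" commands from the manual steps.
    subprocess.run(clone_command(), cwd=folder, check=True)
```

Calling clone_into(r"C:\sdwebui") is then roughly equivalent to the cd and git clone commands above.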

Step 4 -- Download the Stable Diffusion model

Choosing the right model can be tricky. There are four primary models available for SD v1 and two for SD v2, but there's a whole host of extra ones, too. We're just going to use v1.4 because it's had lots of training and it was the one we had the most stability with on our test PC.

Stable Diffusion webUI supports multiple models, so as long as you have the right model, you're free to explore.

- Models for Stable Diffusion v1

- Models for Stable Diffusion v2

The file you want ends with .ckpt, but you'll notice that there are two (e.g. sd-v1-1.ckpt and sd-v1-1-full-ema.ckpt) -- use the first one, not the full-ema one.

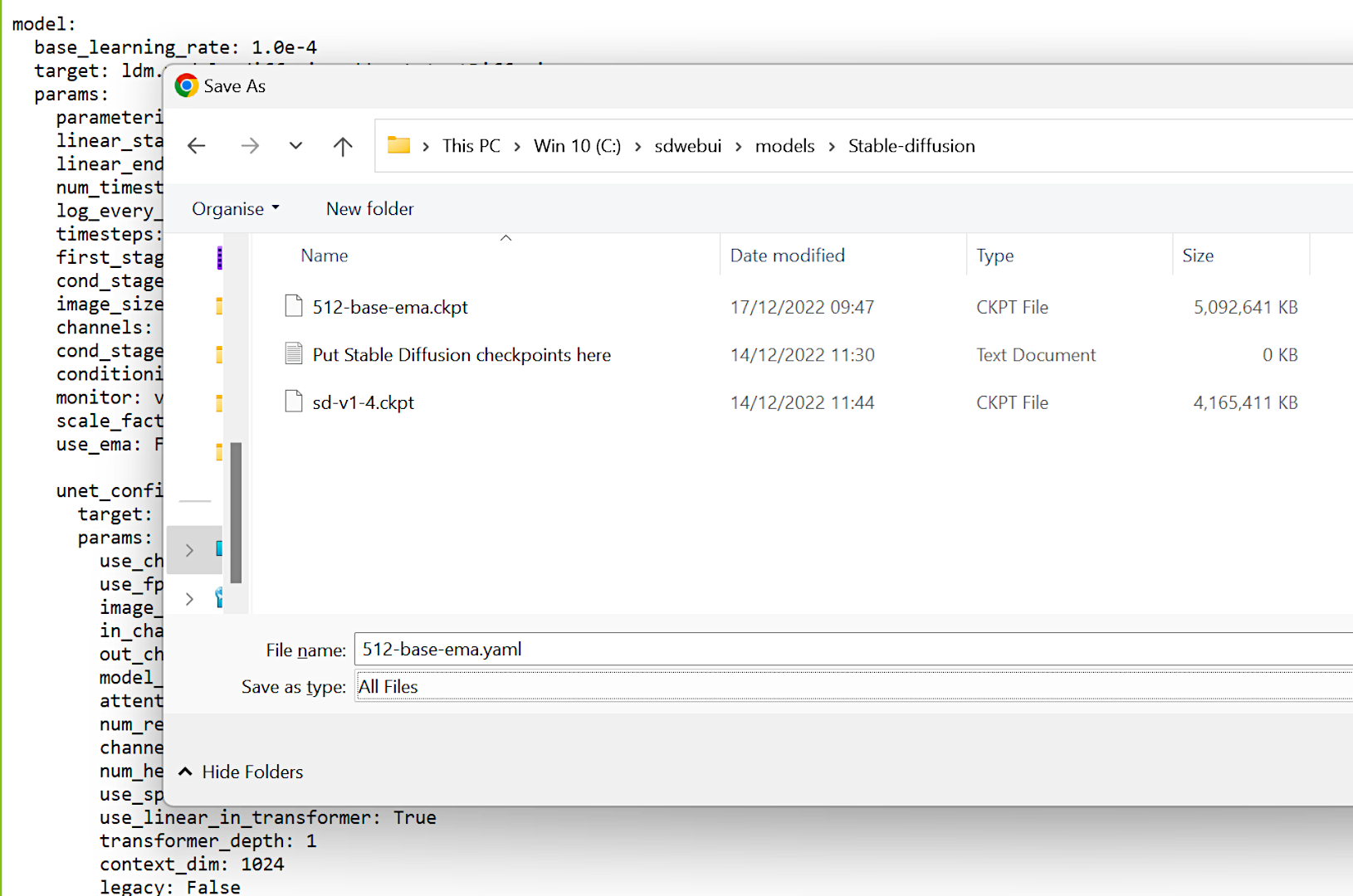

The model files are large (over 4GB), so they will take a while to download. Once you have one, move the file to the C:\sdwebui\models\Stable-diffusion folder -- or to the equivalent models\Stable-diffusion folder inside wherever you installed Stable Diffusion webUI.

Note that if you're planning to use Stable Diffusion v2 models, you'll need to add a configuration file in the above folder.

You can find them for SD webUI here (scroll down to the bottom of the page) -- click on the one you need to use, then press CTRL+S, change the Save as Type to All files, and enter a name that's the same as the model you're using. Finally, ensure that the name ends with the .yaml extension.
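Because the config file must share the checkpoint's name, a tiny Python sketch can derive the expected .yaml name and list checkpoints that lack one. The function names are our own, and 768-v-ema.ckpt is just an example SD v2 file name. Keep in mind that SD v1 checkpoints will be listed too, even though they don't need a config file.

```python
from pathlib import Path


def config_path_for(checkpoint):
    """Expected config for a checkpoint: same folder and name, .yaml extension."""
    return Path(checkpoint).with_suffix(".yaml")


def checkpoints_without_config(model_dir):
    """Names of .ckpt files in `model_dir` that have no matching .yaml file.

    SD v1 checkpoints will be listed too, even though they don't need one.
    """
    folder = Path(model_dir)
    if not folder.is_dir():
        return []
    return sorted(
        ckpt.name
        for ckpt in folder.glob("*.ckpt")
        if not config_path_for(ckpt).exists()
    )
```

For example, config_path_for(r"C:\sdwebui\models\Stable-diffusion\768-v-ema.ckpt") on Windows points at 768-v-ema.yaml in the same folder.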

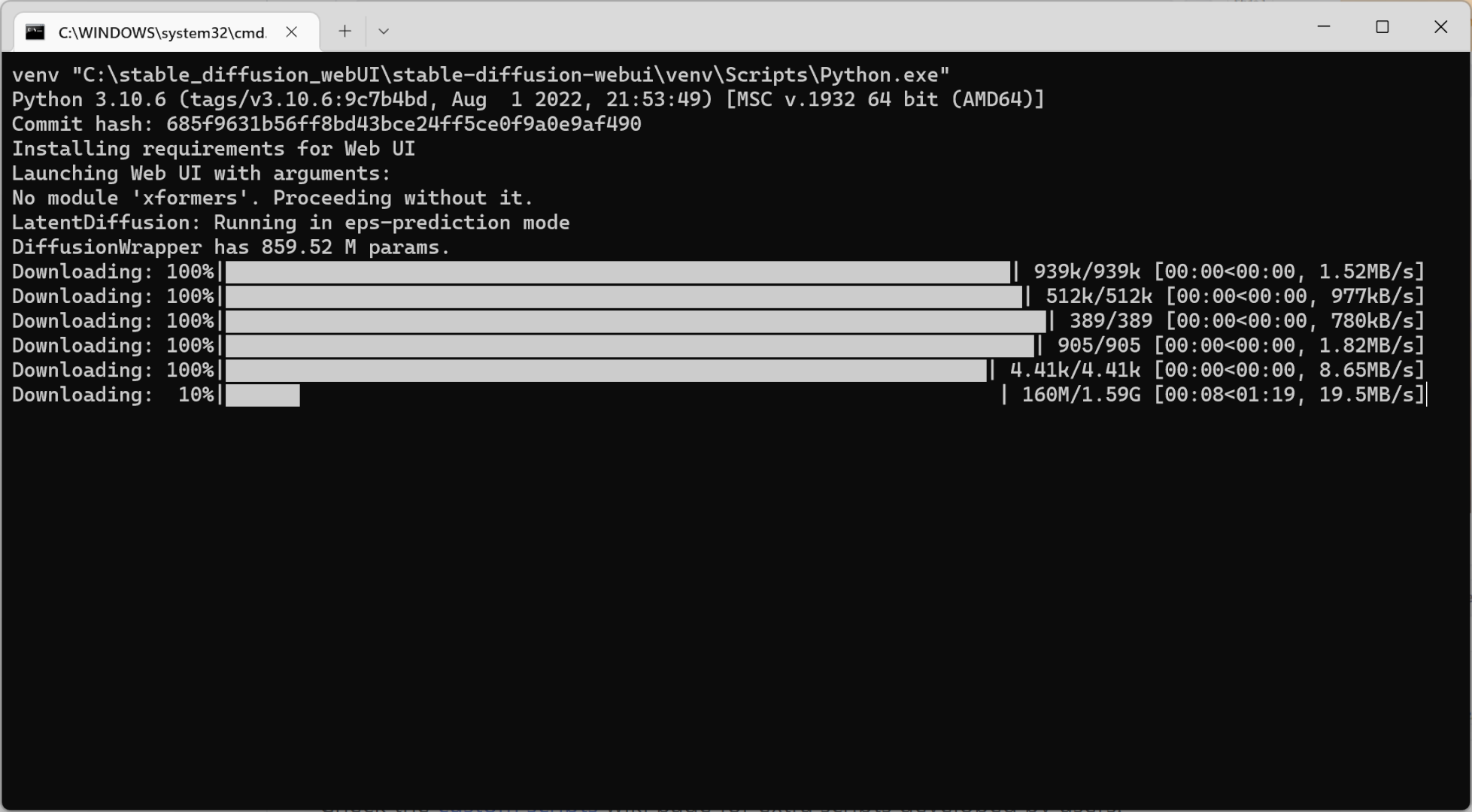

Step 5 -- Running Stable Diffusion webUI for the first time

You're nearly done!

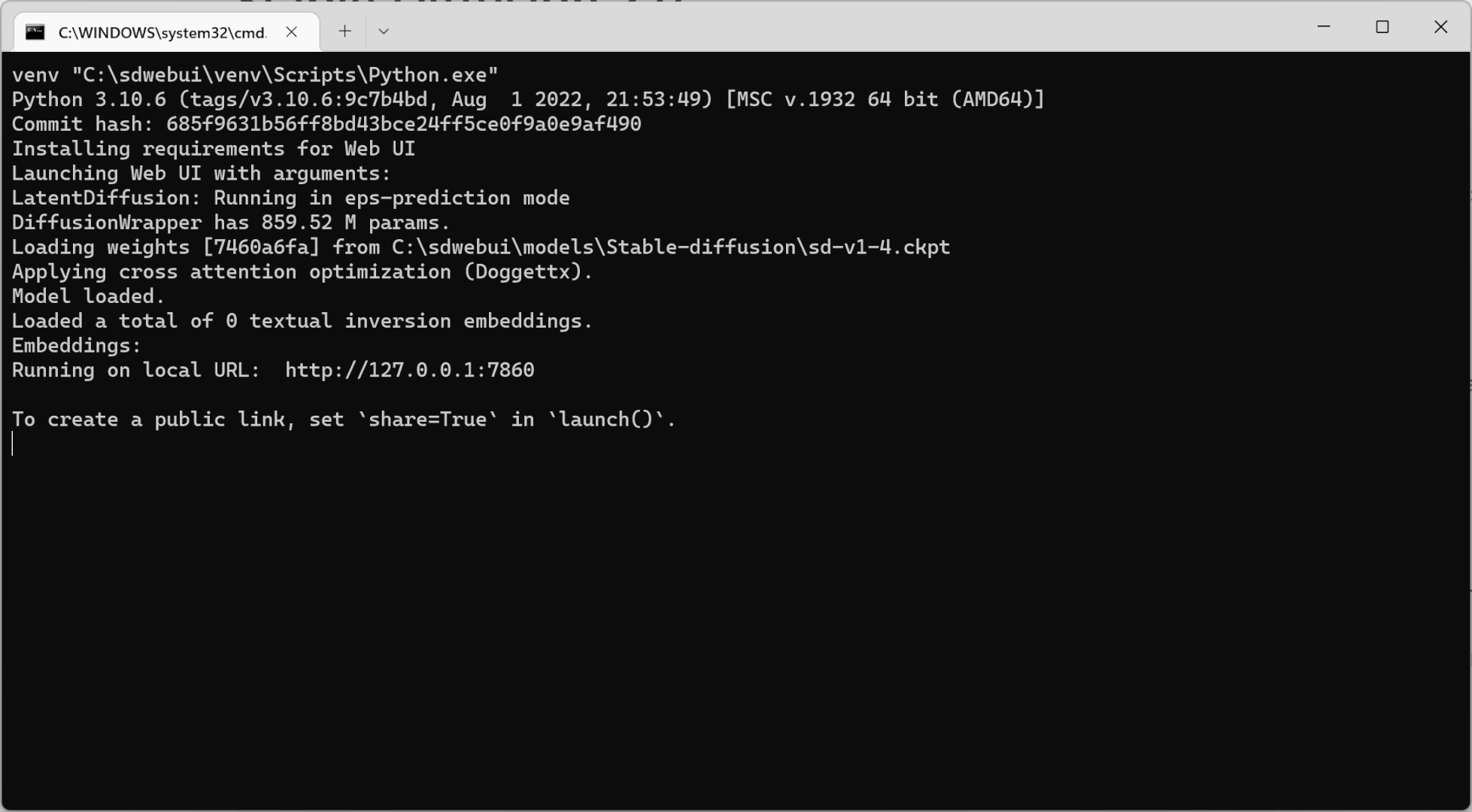

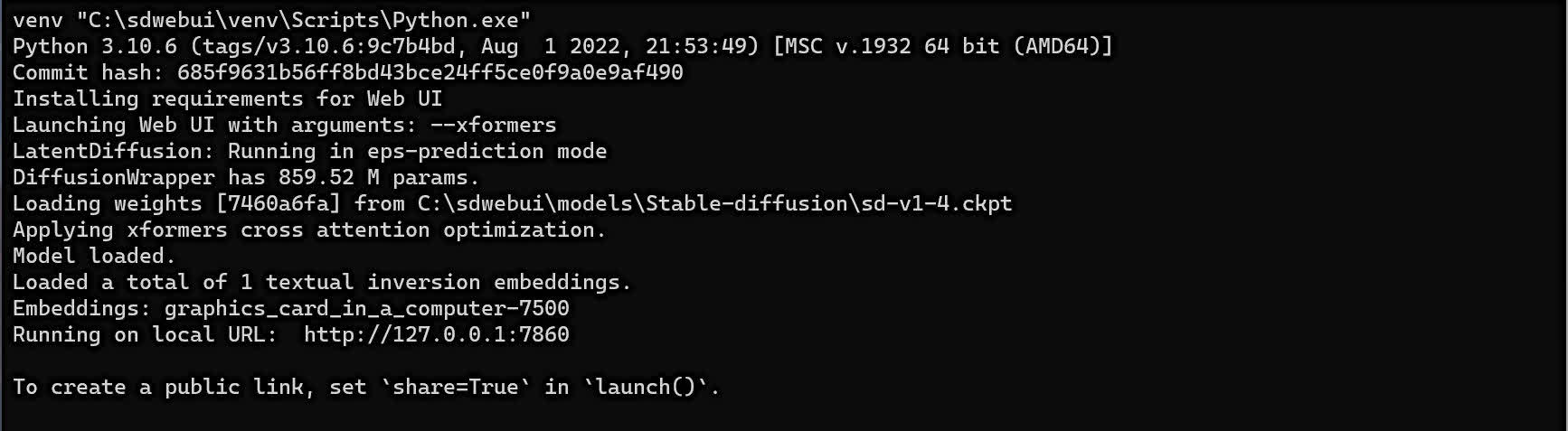

To start everything off, double-click on the Windows batch file labeled webui.bat in the sdwebui folder. A command prompt window will open up, then the system will begin to retrieve all the other files needed.

This can take a long time and often shows little sign of progress, so be patient.

You'll need to run the webui.bat file every time you want to use Stable Diffusion, but it will fire up much quicker in the future, as it will already have the files it needs.

The process will always end with the same last few lines in the command window. Pay attention to the URL it provides -- this is the address you will enter into a web browser (Chrome, Firefox, etc.) to use Stable Diffusion webUI.

Highlight and copy this IP address, open up a browser, paste the link into the address field, press Enter, and bingo!

You're ready to start making some AI-generated images.
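If you'd rather not copy the address by hand, it can be pulled out of the console text programmatically. This sketch assumes the relevant line looks something like "Running on local URL:  http://127.0.0.1:7860" (the exact wording and port may differ on your setup) and uses Python's standard webbrowser module to open it; the helper names are hypothetical.

```python
import re
import webbrowser

# First http:// or https:// address in a chunk of console text.
URL_PATTERN = re.compile(r"https?://\S+")


def find_local_url(console_text):
    """Return the first URL printed in the console output, or None."""
    match = URL_PATTERN.search(console_text)
    return match.group(0) if match else None


def open_webui(console_text):
    """Open the webUI address in the default browser and return it."""
    url = find_local_url(console_text)
    if url is None:
        raise ValueError("no URL found in the console output")
    webbrowser.open(url)
    return url
```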

Going all AI Bob Ross

When Stable Diffusion webUI (or SDWUI for short) is running, there will be two windows open -- a command window and the browser tab. The browser is where you will enter inputs and see outputs, so when you're done and want to shut down SD, make sure you close down both windows.

You'll notice there's an enormous raft of options at your fingertips. Fortunately, you can get started immediately, so let's begin by adding some words to the Prompt field, starting with the 'Hello World' equivalent of AI imagery:

"astronaut on a horse"

If you leave the Seed value at -1, it will randomize the generation starting point each time, so we're going to use a value of 1, but you can test out any value you like.

Now, just click the big Generate button!

Depending on your system's specs, you'll have your first AI-generated image within a minute or so...

SDWUI stores all generated images in the \outputs\txt2img-images folder. Don't worry that the image appears so small. Well, it is small -- just 512 x 512 -- but the model was mostly trained on images that size, which is why the program defaults to this resolution.

But as you can see, we definitely have an image of an astronaut riding a horse. Stable Diffusion is very sensitive to prompt words, and if you want to focus on specific things, add the words to the prompt, separated by commas. You can also add words to the Negative Prompt to tell it to try and ignore certain aspects.

Increasing the sampling steps tends to give better results, as can using a different sampling method. Lastly, the value of the CFG Scale tells SDWUI how much to 'obey' the prompts entered -- the lower the value, the more liberal its interpretation of the instructions will be. So let's have another go and see if we can do better.
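CFG stands for classifier-free guidance. At every sampling step the model makes two noise predictions, one guided by your prompt and one without it (as far as we understand, SDWUI uses the negative prompt in place of the empty one), and the final prediction pushes the unguided result toward the guided one by the scale factor. The minimal sketch below shows that formula on plain lists of numbers rather than real tensors; the function name is our own.

```python
def guided_prediction(uncond, cond, cfg_scale):
    """Classifier-free guidance on plain lists of numbers.

    uncond:    noise prediction without the prompt (empty or negative prompt)
    cond:      noise prediction with the positive prompt
    cfg_scale: the CFG Scale value

    result = uncond + cfg_scale * (cond - uncond)
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must be the same length")
    # cfg_scale = 1 returns cond unchanged; larger values push further in
    # the direction the prompt points, and very high values can overshoot.
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]
```

With a scale of 7, for instance, an unguided value of 0.5 and a guided value of 1.0 become 0.5 + 7 x 0.5 = 4.0, which shows how quickly high settings exaggerate the prompt's influence.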

This time, our prompt words were 'astronaut on a horse, nasa, realistic' and we added 'painting, cartoon, surreal' to the negative prompt field; we also increased the sampling steps to 40, used the DDIM method, and raised the CFG scale to 9...

It's arguably a lot better now, but still far from perfect. The horse's legs and the astronaut's hands don't look right, for a start. But with further experimentation using prompt and negative prompt words, as well as the number of steps and scale value, you can eventually reach something that you're happy with.

The Restore Faces option helps to improve the quality of any human faces you're expecting to see in the output, and enabling Highres Fix gives you access to further controls, such as the amount of denoising that gets applied. With a few more prompts and some additional tweaks, we present you with our finest creation.

Using a different seed can also help get you the image you're after, so experiment away!
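Why does a fixed seed give repeatable results? The starting noise for each image comes from a pseudo-random generator, and seeding it the same way produces the same noise. The sketch below illustrates the idea with Python's random module; SDWUI itself seeds PyTorch rather than this module, and the 32-bit seed range and the function names here are our own assumptions.

```python
import random

MAX_SEED = 2**32 - 1  # assumed seed range for this illustration


def resolve_seed(seed):
    """-1 means 'pick a new random seed'; any other value is used as-is."""
    if seed == -1:
        return random.randint(0, MAX_SEED)
    return seed


def starting_noise(seed, count=4):
    """Stand-in for the initial noise an image starts from.

    A generator seeded with the same value always yields the same numbers,
    so the same seed (with the same settings) reproduces the same image.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]
```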

Stable Diffusion can be used to create all kinds of themes and art styles, from fantasy landscapes and vibrant city scenes to realistic animals and comical impressions.

With multiple models to download and explore, there's a wealth of content that can be created, and while it can be argued that using Stable Diffusion isn't the same as actual art or media creation, it can be a lot of fun to play with.

Impressive "Creations": It's all about the input text

So say you've finally perfected this astronaut, but no good prompts come to mind for generating something that looks as good as the illustrations circulating online. It turns out we were able to create much better images once we understood how important (and detailed) the text input was.

Tip: In SDWUI, there is a paint palette icon right next to the Generate button that will input random artists' names. Play around with this to create different takes and styles of the same prompts.

Tip 2: There are already plenty of resources for prompts and galleries out there.

Here are some good examples found online and their corresponding prompts to give you an idea:

Cute post apocalyptic portrait

Prompt: A full portrait of a beautiful post apocalyptic offworld nanotechnician, intricate, elegant, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by Krenz Cushart and Artem Demura and alphonse mucha

Cat Knight

Prompt (source): kneeling cat knight, portrait, finely detailed armor, intricate design, silver, silk, cinematic lighting, 4k

Space Fantasy

Prompt (source): Guineapig's ultra realistic detailed floating in universe suits floating in space, nubela, warmhole, beautiful stars, 4 k, 8 k, by simon stalenhag, frank frazetta, greg rutkowski, beeple, yoko taro, christian macnevin, beeple, wlop and krenz cushart, epic fantasy character art, volumetric outdoor lighting, midday, high fantasy, cgsociety, cheerful colours, full length, exquisite detail, post @ - processing, masterpiece, cinematic

Old Harbour

Prompt (source): old harbour, tone mapped, shiny, intricate, cinematic lighting, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by terry moore and greg rutkowski and alphonse mucha

Asian warrior

Prompt (source): portrait photo of a asia old warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes, 50mm portrait photography, hard rim lighting photography–beta –ar 2:3 –beta –upbeta –upbeta

Kayaking

Prompt (source): A kayak in a river. blue water, atmospheric lighting. by makoto shinkai, stanley artgerm lau, wlop, rossdraws, james jean, andrei riabovitchev, marc simonetti, krenz cushart, sakimichan, d & d trending on artstation, digital art.

Magical flying dog

Prompt (source): a cute magical flying dog, fantasy art drawn by disney concept artists, golden colour, high quality, highly detailed, elegant, sharp focus, concept art, character concepts, digital painting, mystery, adventure

Upscaling with Stable Diffusion: Bigger is better but not always easy

So what else can you do with Stable Diffusion? Other than having fun making pictures from words, it can also be used to upscale pictures to a higher resolution, restore or fix images by removing unwanted areas, and even extend a picture beyond its original frame borders.

By switching to the img2img tab in SDWUI, we can use the AI algorithm to upscale a low-resolution image.

The models were primarily trained using very small pictures with 1:1 aspect ratios, so if you're planning on upscaling something that's 1920 x 1080 in size, for example, you might think you're out of luck.

Fortunately, SDWUI has a solution for you. At the bottom of the img2img tab, there is a Script drop-down menu where you can select SD Upscale. This script will break the image up into multiple 512 x 512 tiles and use another AI algorithm (e.g. ESRGAN) to upscale them. The program then uses Stable Diffusion to improve the results of the larger tiles, before stitching everything back into a single image.
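To picture how an image gets split up, here is a rough sketch of laying out overlapping 512 x 512 tiles. It is our approximation rather than SDWUI's code: we assume the tiles are spread evenly so that the first starts at the top-left edge, the last ends flush with the far edge, and neighbours overlap by at least the requested amount (give or take a pixel of rounding). The function names are hypothetical.

```python
import math


def tile_starts(length, tile=512, overlap=32):
    """Start offsets of overlapping tiles covering `length` pixels on one axis."""
    if length <= tile:
        return [0]
    stride = tile - overlap
    # Enough tiles that neighbours overlap by at least `overlap` pixels.
    count = math.ceil((length - overlap) / stride)
    # Spread them evenly: first at 0, last ending exactly at the far edge.
    step = (length - tile) / (count - 1)
    return [round(i * step) for i in range(count)]


def tile_grid(width, height, tile=512, overlap=32):
    """(x, y) top-left corners of every tile, row by row."""
    return [
        (x, y)
        for y in tile_starts(height, tile, overlap)
        for x in tile_starts(width, tile, overlap)
    ]
```

For example, tile_grid(2732, 1536), a 2x enlargement of a 1366 x 768 screenshot, gives 24 tiles (6 across, 4 down), which hints at why larger images take so much longer to process.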

Here's a screenshot taken from Cyberpunk 2077, with a 1366 x 768 in-game resolution. As you can see, it doesn't look super bad, but the text is somewhat difficult to read, so let's run it through the process using ESRGAN_4x to upscale each tile, followed by Stable Diffusion processing to tidy them up.

We used 80 sampling steps, the Euler-a sampling method, 512 x 512 tiles with an overlap of 32 pixels, and a denoising strength of 0.1 so as not to remove too much fine detail.

It's not a great result, unfortunately, as many textures have been blurred or darkened. The biggest issue is the effect on the text elements in the original image, as they're clearly worse after all that neural network number crunching.

If we use an image editing program like GIMP to upscale the original screenshot by a factor of 2 (using the default bicubic interpolation), we can easily judge just how effective the AI method has been.

Yes, everything is now blurry, but at least you can easily pick out all of the digits and letters displayed. But we're being somewhat unfair to SDWUI here, as it takes time and multiple runs to find the perfect settings -- there's no quick solution to this, unfortunately.

Another aspect that the system is struggling with is the fact that the picture contains multiple visual elements: text, numbers, sky, buildings, people, and so on. While the AI model was trained on billions of images, relatively few of them will be exactly like this screenshot.

So let's try a different image, something that contains few elements. We've taken a low-resolution (320 x 200) photo of a cat, and below are two 4x upscales -- the left was done in GIMP with no interpolation used, and on the right is the result of 150 sampling steps, Euler-a, a 128-pixel overlap, and a very low denoising value.

The AI-upscaled image appears a tad more pixelated than the other picture, particularly around the ears, and the lower part of the neck isn't too great either. But with more time, and further experimentation with the dozens of parameters SDWUI offers for running the algorithm, better results could be achieved. You can also try a different SD model, such as x4-upscaling-ema, which should give superior results when aiming for very large final images.

Removing/adding elements: Say hello, wave goodbye

Two more tricks you can do with Stable Diffusion are inpainting and outpainting -- let's start with the former.

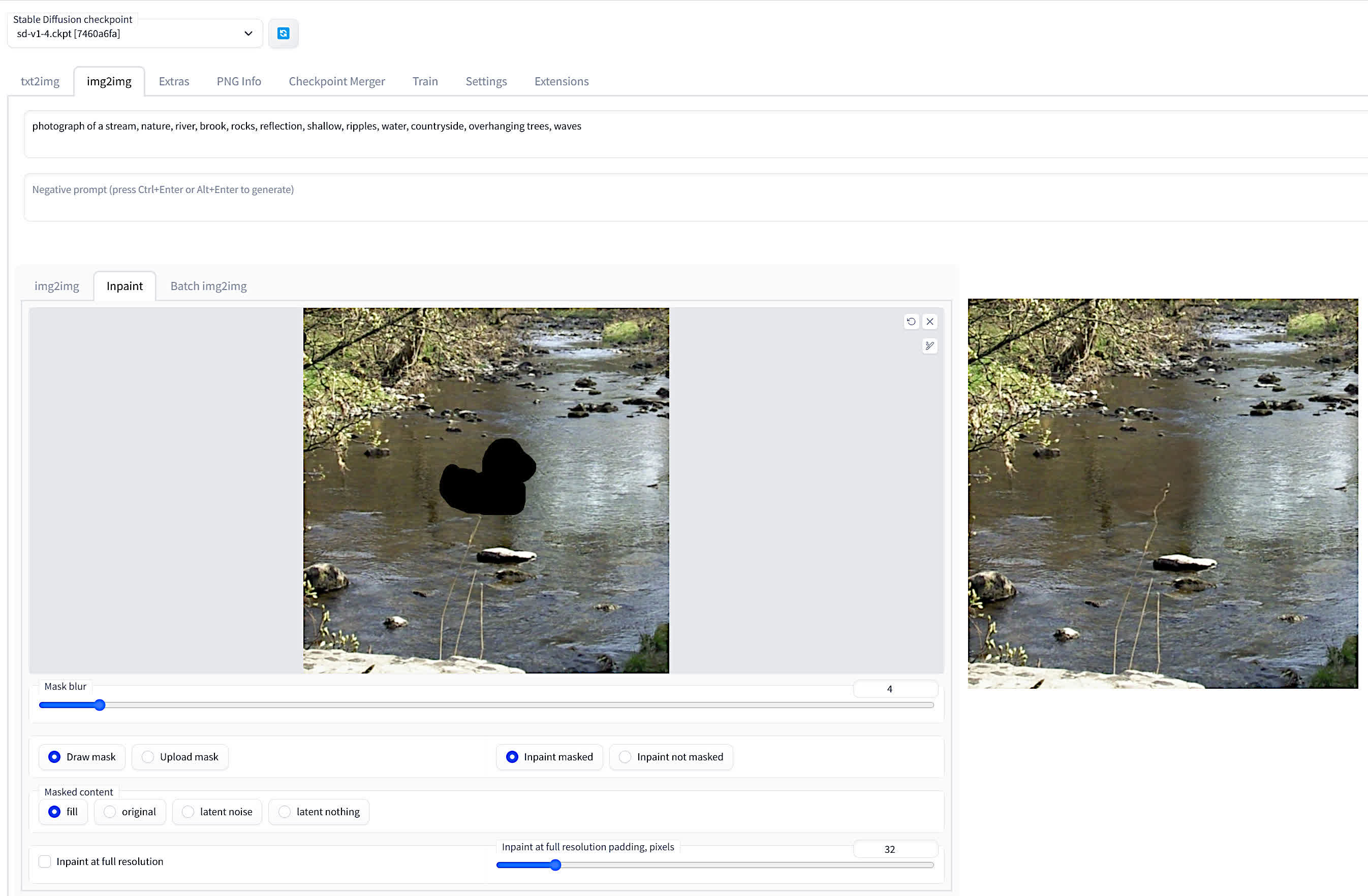

Inpainting involves removing a selected area from an image, then filling in that space with what should be there if the object wasn't present. The feature is found in the main img2img tab, under the Inpaint sub-tab.

To make this work as well as possible, use lots of carefully chosen prompts (experiment with negative ones, too), a high number of sampling steps, and a fairly high denoising value.
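Conceptually, the mask decides which pixels come from the newly generated content and which are kept from the original. The sketch below shows that blend on tiny grayscale images stored as lists of rows; it illustrates the idea only, since SDWUI's real inpainting pipeline is considerably more involved and offers its own mask blur setting. Fractional mask values give the kind of soft edge a blurred mask produces, and the function name is our own.

```python
def composite(original, generated, mask):
    """Blend two equal-sized grayscale images (lists of rows of numbers).

    Mask values run from 0.0 (keep the original pixel) to 1.0 (use the
    generated pixel), so only the painted-over area is replaced.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]
```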

We took a photo of a shallow stream in the countryside, then added a flat icon of a rubber duck to the surface of the water. The default method in SDWUI is to just use the built-in mask tool, which simply colors in the area you want to remove in black.

If you look at the prompts in the screenshot above, you'll see we included a variety of words that are associated with how the water looks (reflections, ripples, shallow) and objects that affect this look too (trees, rocks).

Here's how the original and inpainted images look, side-by-side:

You can tell something has been removed, and a talented photo editor could probably achieve the same or better without resorting to AI. But it's not especially bad and, once again, with more time and tweaks, the end result could easily be improved.

Outpainting does a similar thing, but instead of replacing a masked area, it simply extends the original image. Stay in the img2img tab and sub-tab, and go to the Script menu at the bottom -- select Poor man's outpainting.

For this to work well, use as many sampling steps as your system/patience can cope with, along with a very high value for the CFG and denoising scales. Also, resist the temptation to expand the image by lots of pixels; start low, e.g. 64, and experiment from there.
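It helps to know what an expansion of, say, 64 pixels does to the canvas. In this sketch we assume the value is added to each selected side separately (the direction options and the function name are our assumptions), so expanding all four sides of a 512 x 512 image yields 640 x 640, with the original placed 64 pixels in from the top-left corner.

```python
def expand_canvas(width, height, pixels=64,
                  left=True, right=True, up=True, down=True):
    """New canvas size and where the original image sits on it.

    Assumes `pixels` is added to each selected side separately.
    Returns ((new_width, new_height), (paste_x, paste_y)).
    """
    new_width = width + pixels * (int(left) + int(right))
    new_height = height + pixels * (int(up) + int(down))
    # The original shifts right/down only if space was added on that side.
    paste_x = pixels if left else 0
    paste_y = pixels if up else 0
    return (new_width, new_height), (paste_x, paste_y)
```

Everything outside the pasted original is new area the model has to invent, which is why small expansions tend to work better.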

Taking our image of the stream, minus the rubber duck, we ran it through multiple attempts, adjusting the prompts each time. And here's the best we achieved in the time available:

To say it's disappointing would be an understatement. So what's the problem here? Outpainting is very sensitive to the prompts used, and many hours could easily be spent trying to find the perfect combination, even if it's very clear in your mind what the image should be showing.

One way to help improve the choice of prompts is to use the Interrogate CLIP button next to the Generate one. The first time you use it, SDWUI will download a bunch of large files, so it won't work immediately, but once everything has been downloaded, the system will run the image through the CLIP neural network to give you the prompts that the encoder deems the best fit.

In our case, it gave us the phrase "a river running through a forest filled with trees and rocks on a sunny day with no leaves on the trees, by Alexander Milne Calder." Calder, a US sculptor from the last century, definitely wasn't involved in taking the photo, but simply using the rest as the prompt for the outpainting gave us this:

See how much better it is? The lack of focus and the masking are still problems, but the content that's generated is very good. What this all shows, though, is very clear -- Stable Diffusion is only as good as the prompts you use.

Training your own model

The first Stable Diffusion model was trained using a very powerful computer, packed with several hundred Nvidia A100 GPUs, running for hundreds of hours. So you might be surprised to learn that you can do your own training on a decent PC.

If you use SDWUI and prompt it with "graphics card in a computer," you won't get anything really like it -- most results typically only show part of a graphics card. It's hard to say just how many images in the LAION-5B dataset would cover this scenario, but it doesn't really matter; you can adapt a tiny part of the trained model yourself.

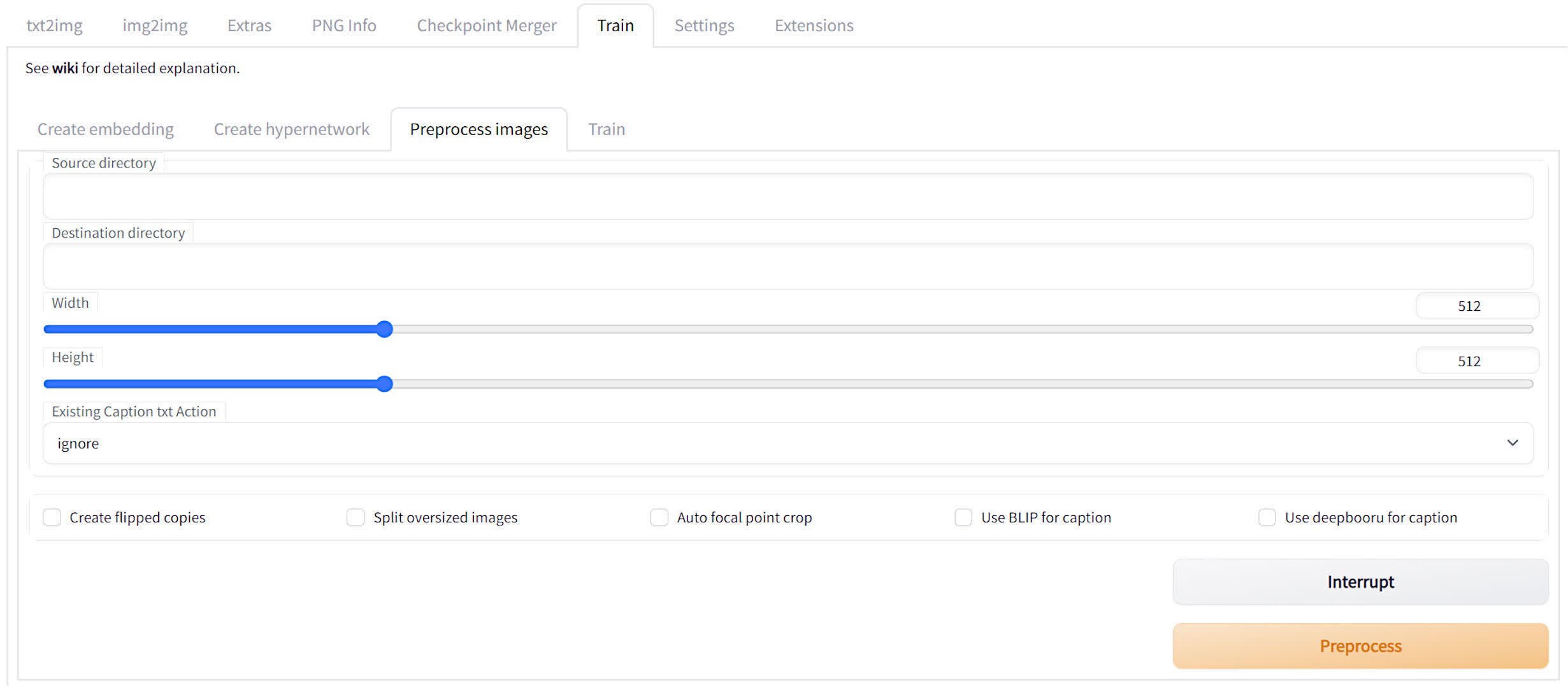

You'll need at least 20 images, taken from different angles and so on, and they must all be 512 x 512 in size for Stable Diffusion 1.x models or 768 x 768 for SD 2.x models. You can either crop the images yourself or use SDWUI to do it for you.

Store your images in a folder somewhere on your PC and make a note of the folder's address. Then go to the Train tab and click on the Preprocess images sub-tab. You'll see two fields for folder locations -- the first is where your original images are stored and the second is where you want the cropped images to be stored. With that info all entered, just hit the Preprocess button and you're all set to start training.
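If you crop the images yourself instead, one simple approach is to take the largest centred square and then resize it to the training size. The helper below computes that square as a (left, top, right, bottom) box; it is a sketch of one reasonable method with names of our own choosing, not necessarily what SDWUI's preprocessing does.

```python
# Training resolution per Stable Diffusion major version, as given in the guide.
TRAINING_SIZE = {1: 512, 2: 768}


def center_square_box(width, height):
    """Largest centred square as a (left, top, right, bottom) box."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def crop_plan(width, height, sd_major_version=1):
    """Box to crop, plus the square size to resize the crop to."""
    size = TRAINING_SIZE[sd_major_version]
    return center_square_box(width, height), (size, size)
```

With the Pillow library installed, the box can be passed to Image.crop() and the result resized with Image.resize((512, 512)) for SD 1.x, or (768, 768) for SD 2.x. Note that centre-cropping can cut off a subject that sits near the edge of the frame.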

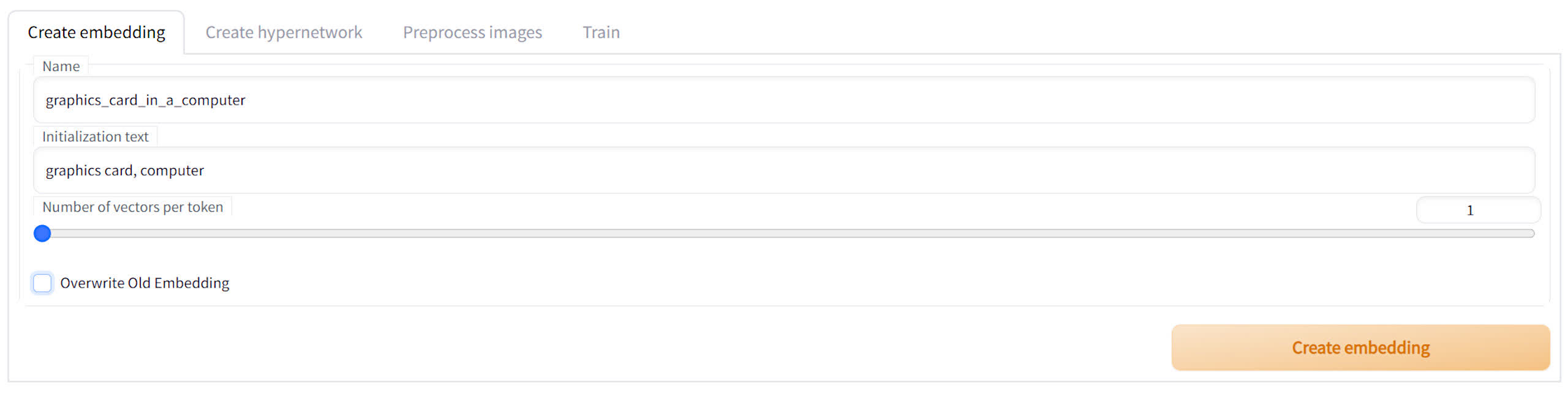

First, create an embedding file -- click on the Create embedding sub-tab, give the file a name, followed by some initialization text. Pick something simple so it will be easy to remember when you type in prompts for txt2img.

Then set the number of vectors per token. The higher this value is, the more accurate the AI generation will be, but you'll also need increasingly more source images, and it will take longer to train the model. It's best to use a value of 1 or 2 to begin with.

Now just click the Create embedding button and the file will be stored in the \sdwebui\embeddings folder.
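Each vector per token is one more embedding the training has to learn, so the amount of trainable data grows linearly with that setting. The sketch below does the arithmetic; the widths (768 values per vector for the CLIP text encoder used by SD 1.x, 1024 for the OpenCLIP encoder used by SD 2.x) are the text-encoder sizes of those model families, and the function name is our own.

```python
# Width of one token embedding in each model family's text encoder:
# CLIP ViT-L/14 (SD 1.x) uses 768 values, OpenCLIP ViT-H/14 (SD 2.x) uses 1024.
EMBEDDING_WIDTH = {1: 768, 2: 1024}


def embedding_values(vectors_per_token, sd_major_version=1):
    """How many numbers textual-inversion training has to learn."""
    if vectors_per_token < 1:
        raise ValueError("vectors per token must be at least 1")
    return vectors_per_token * EMBEDDING_WIDTH[sd_major_version]
```

Going from 1 to 2 vectors on an SD 1.x model doubles the learned values from 768 to 1,536, which is tiny next to the full model but still needs more images and steps to pin down.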

With the embedding file ready and your collection of images to hand, it's time to start the training process, so head on to the Train sub-tab.

There are quite a few sections here. Start by entering the name of the embedding you're going to train (it should be present in the drop-down menu) and the dataset directory, the folder where you've stored your training images.

Next, take a look at the Embedding Learning rate -- higher values give you faster training, but set it too high and you'll run into all kinds of problems. A value of 0.005 is appropriate if you selected 1 vector per token.

Then change the Prompt template file from style_filewords to subject_filewords and lower the number of Max steps to something below 30,000 (the default value of 100,000 will go on for many hours). Now you're ready to click the Train Embedding button.

This will work your PC hard and take a long time, so make sure your computer is stable and not needed for the next few hours.

After about 3 hours, our training attempt (done on an Intel Core i9-9700K, 16GB DDR4-3200, Nvidia RTX 2080 Super) was finished, having worked through a total of 30 images scraped from the web.

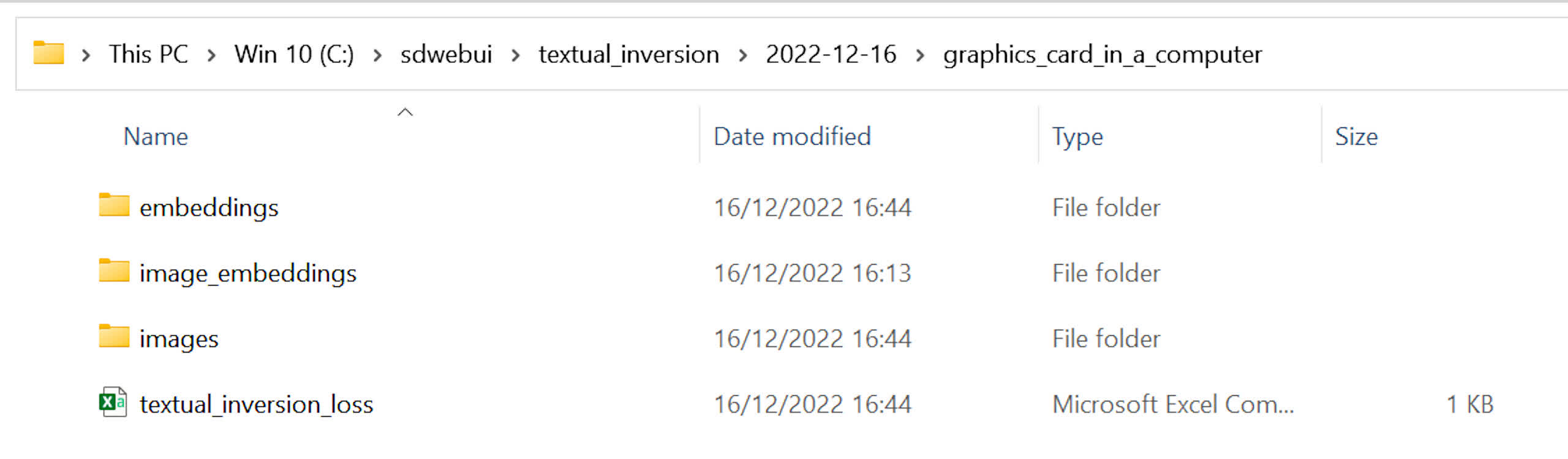

All of the results of the training get stored in the \textual_inversion\ folder, and what you get are multiple embeddings and an associated image for each one. Go through the pictures in the images folder and make a note of the name of the one that you like the most, which wasn't easy in our case.

Most of them are pretty awful, and that's down to two things -- the quality of the source images for the training and the number of steps used. It would have been better for us to have taken our own photos of a graphics card in a computer, so we could ensure that the majority of each picture focused on the card.

Once you've chosen the best image, go into the embeddings folder and select the file that has the same name. Copy and paste it into the embeddings folder in the main SDWUI folder. It's a good idea to rename the file to something that indicates the number of vectors used if you're planning on training multiple times.

Now all you need to do is restart SDWUI, and your trained embedding will be loaded automatically; to use it, include its name in your prompt, as shown below.

If, like us, you've only used a handful of images or only trained for a couple of hours, you may not see much difference in the txt2img output, but you can repeat the whole process by reusing the embedding you created.

The training you've done can always be improved by using more images and steps, as well as tweaking the vector count.

Tweak 'till the cows come home

Stable Diffusion and the SDWUI interface have so many features that this article could easily be three times as long to cover them all. You can check out an overview of them here, but it's surprisingly fun just exploring the various functions yourself.

The same is true for the Settings tab -- there is an enormous number of things you can change, but for the most part, it's fine to leave them as they are.

Stable Diffusion works faster the more VRAM your graphics card has -- 4GB is the absolute minimum, but there are some parameters that can be used to lower the amount of video memory used, and there are others that will use your card more efficiently, too.

Batch file settings

Right-click on the webui batch file you use to start SDWUI and click on Create shortcut. Right-click on that shortcut and select Properties. In the Target field, the following parameters can be added to change how SDWUI performs:

- --xformers = enables the use of the xFormers library, which can substantially improve the speed at which images are generated. Only use it if you have an Nvidia graphics card with a Turing or newer GPU

- --medvram = reduces the amount of VRAM used, at a cost of processing speed

- --lowvram = significantly reduces the amount of VRAM needed, but images will be created a lot slower

- --lowram = loads the Stable Diffusion weights into VRAM instead of system memory, which helps on PCs with little system RAM but a graphics card with plenty of VRAM

- --use-cpu = some of the main features of SDWUI, such as Stable Diffusion and ESRGAN, will be processed on the CPU instead of the GPU. Image generation time will be very long!

There are a lot more parameters that can be added, but don't forget that this is a work-in-progress project, and they may not always function correctly. For example, we found that Interrogate CLIP didn't start at all when using the --no-half parameter (which prevents FP16 from being used in calculations).
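If you juggle several combinations, a tiny script can assemble the Target line for you. This is a hypothetical helper of our own that only knows about the no-argument flags mentioned in this guide; it leaves out --use-cpu, which expects extra arguments naming what to move to the CPU, and it treats --medvram together with --lowvram as a mistake, since they are alternative memory-saving modes.

```python
# No-argument flags mentioned in this guide (SDWUI accepts many more).
KNOWN_FLAGS = ("--xformers", "--medvram", "--lowvram", "--lowram", "--no-half")


def shortcut_target(batch_file, *flags):
    """Build the text for a shortcut's Target field: batch file + flags."""
    unknown = [flag for flag in flags if flag not in KNOWN_FLAGS]
    if unknown:
        raise ValueError(f"not covered by this helper: {unknown}")
    # Our own rule: the two VRAM-saving modes are alternatives, pick one.
    if "--medvram" in flags and "--lowvram" in flags:
        raise ValueError("use either --medvram or --lowvram, not both")
    # Windows needs quotes around a path that contains spaces.
    target = f'"{batch_file}"' if " " in batch_file else batch_file
    return " ".join([target, *flags])
```

For example, shortcut_target(r"C:\sdwebui\webui.bat", "--xformers", "--medvram") returns the text to paste into the Target field for an Nvidia card that is a little short on VRAM.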

While we're on the subject of GPUs, remember that Stable Diffusion was developed for Nvidia graphics cards. SDWUI can be made to work on AMD GPUs by following this installation procedure. Other implementations of Stable Diffusion are available for AMD graphics cards, and this one for Windows has detailed installation instructions. You won't get a UI with that method, so you'll be doing everything via the command prompt.

With most of the basics covered, you should have enough knowledge to properly dive into the world of AI image generation. Let us know how you get on in the comments below.

Source: https://www.techspot.com/guides/2590-install-stable-diffusion/
